How to fluff out matter

A simple explanation of the why and wherefore of quantum indeterminacy

In the last mailing I mentioned the Serbo-Croatian polymath Roger Boscovich, who in the 18th Century formulated an atomic theory which accounted for the stability of matter in terms of equilibria between attractions and repulsions, to which I added: “much like quantum theory does today.” In this letter I want to (among other things) specify the extent to which this comparison is justified.

A bit of quantum history to begin with.

Quantum physics started out as a rather desperate measure to avoid some of the spectacular failures of classical physics. The story usually begins with the discovery by Max Planck, in 1900, of the law that perfectly describes the radiation spectrum of a glowing hot object. (One of the things predicted by classical physics was that you would get blinded by ultraviolet light if you looked at the burner of your stove.) According to classical theory, a glowing hot object emits energy continuously. Planck took his formula to imply that such an object emits energy in discrete amounts (“quanta”) equal to the product of the radiation frequency f and a new universal constant h now known as “Planck’s constant”: E = hf.
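To see how badly the classical prediction fails, here is a small Python sketch that compares the classical (Rayleigh-Jeans) spectral radiance of a hot object with Planck's law and prints the quantum energy E = hf at each frequency. The burner temperature of 1000 K is an assumption chosen for illustration. At radio frequencies the two formulas agree. In the ultraviolet the classical one overshoots by a factor of order 10²⁹.

```python
import math

H = 6.62607015e-34    # Planck's constant h in J*s (exact SI value)
K_B = 1.380649e-23    # Boltzmann constant in J/K (exact SI value)
C = 299792458.0       # speed of light in m/s
EV = 1.602176634e-19  # one electronvolt in J

def planck(f, T):
    """Planck's law: spectral radiance per unit frequency, W / (sr m^2 Hz)."""
    x = H * f / (K_B * T)
    return 2 * H * f**3 / C**2 / math.expm1(x)  # expm1 stays accurate for small x

def rayleigh_jeans(f, T):
    """Classical prediction: grows as f**2 without limit (the 'ultraviolet catastrophe')."""
    return 2 * f**2 * K_B * T / C**2

T = 1000.0  # ASSUMED temperature of a glowing stove burner, in kelvin
for f in (1e9, 1e13, 5e14, 1.5e15):  # radio, infrared, visible, ultraviolet
    ratio = rayleigh_jeans(f, T) / planck(f, T)
    print(f"f = {f:.1e} Hz, quantum hf = {H * f / EV:.3g} eV, "
          f"classical/Planck = {ratio:.3g}")
```

The ratio is close to 1 only where hf is small compared with the thermal energy k_B T. That is where treating energy as continuous does no harm.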

A decade later, in 1911, Ernest Rutherford proposed a model of the atom based on experiments conducted by Hans Geiger and Ernest Marsden. Geiger and Marsden had directed a beam of α-particles (helium nuclei) at a thin gold foil. While most of the particles were deflected by at most a few degrees, a tiny fraction were deflected through angles larger than 90 degrees. In Rutherford’s own words: “It was almost as incredible as if you fired a 15-inch shell at a piece of tissue paper and it came back and hit you.” Rutherford concluded from this that the major part of the atom’s mass must be concentrated in a minute nucleus. The atomic model he proposed, which had electrons orbiting the nucleus more or less as planets orbit a star, was however short-lived. Classical electromagnetic theory predicts that an orbiting electron will radiate away its energy and spiral into the nucleus in less than a nanosecond.
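The claim of "less than a nanosecond" can be checked with the standard textbook estimate, which uses the nonrelativistic Larmor formula for the power radiated by an accelerated charge. The sketch assumes that the electron starts on a circular orbit of radius equal to the Bohr radius and stays on a nearly circular, slowly shrinking orbit. Under those assumptions the fall time has the closed form t = r₀³/(4 r_e² c), where r_e is the classical electron radius.

```python
A0 = 5.29177210903e-11  # Bohr radius in m, ASSUMED as the starting orbit radius
R_E = 2.8179403262e-15  # classical electron radius e^2/(4 pi eps0 m c^2) in m
C = 299792458.0         # speed of light in m/s

# Nonrelativistic Larmor radiation from a (nearly) circular orbit gives
#   dr/dt = -(4/3) * R_E**2 * C / r**2,
# which integrates to a fall time t = r0**3 / (4 * R_E**2 * C).
def fall_time(r0):
    """Classical time (s) for the electron to spiral from radius r0 into the nucleus."""
    return r0**3 / (4 * R_E**2 * C)

t = fall_time(A0)
print(f"classical collapse time: {t:.2e} s")  # roughly 1.6e-11 s, well under a nanosecond
```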

Thus began the search for an atomic model that was stable. To prevent the hydrogen atom from collapsing, Niels Bohr in 1913 postulated that the atom’s angular momentum L could only take values that were integral multiples of Planck’s recently discovered universal constant h divided by 2π, that is, L = nh/(2π), where n is a positive integer. (Angular momentum is to linear momentum what circular or rotatory motion is to motion in a straight line.) The results were stunning.

Each type of atom emits electromagnetic radiation at a characteristic set of frequencies. These show up as lines in the spectra of the emitted radiation. By the end of the 19th Century, thousands of spectral lines had been measured and catalogued, yet no one had a clue to their origin. Bohr’s postulate not only predicted that atoms emit and absorb radiation at specific frequencies but also made it possible to calculate the spectrum of atomic hydrogen with phenomenal accuracy.
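Here is a minimal sketch of what "phenomenal accuracy" means. The code computes the Bohr energy levels Eₙ = −μe⁴/(8ε₀²h²n²), which follow from the condition L = nh/(2π). It then computes the wavelengths of the first three Balmer lines, which correspond to jumps down to n = 2. The sketch uses the reduced mass μ of electron and proton rather than the bare electron mass; this refinement is an assumption of the sketch. The constants are CODATA values. The computed red line comes out at about 656.47 nm, against a measured vacuum wavelength of about 656.5 nm.

```python
M_E = 9.1093837015e-31   # electron mass, kg
M_P = 1.67262192369e-27  # proton mass, kg
E = 1.602176634e-19      # elementary charge, C
EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m
H = 6.62607015e-34       # Planck's constant, J s
C = 299792458.0          # speed of light, m/s

# ASSUMPTION: use the reduced mass, since electron and proton both move
# about their common centre of mass.
MU = M_E * M_P / (M_E + M_P)

def bohr_energy(n):
    """Energy (J) of the n-th Bohr level, from the condition L = n h / (2 pi)."""
    return -MU * E**4 / (8 * EPS0**2 * H**2 * n**2)

def wavelength_nm(n_upper, n_lower):
    """Vacuum wavelength (nm) of the photon emitted in the jump n_upper -> n_lower."""
    delta_e = bohr_energy(n_upper) - bohr_energy(n_lower)
    return H * C / delta_e * 1e9

print(f"E1 = {bohr_energy(1) / E:.3f} eV")
for n in (3, 4, 5):  # Balmer series: jumps down to n = 2
    print(f"{n} -> 2: {wavelength_nm(n, 2):.2f} nm")
```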

In the mature quantum theory of 1925/1926, due to Werner Heisenberg (mostly) and Erwin Schrödinger (independently), the “allowed” states of atomic hydrogen are characterized not only by their distinct energies E₁, E₂, E₃, …, and the frequencies fₘₙ = (Eₘ − Eₙ)/h at which the atom can emit or absorb radiation, but also by two other “quantum numbers,” both of which are related to the atom’s angular momentum L: the magnitude of L and a particular component of L (usually taken to be the z component).
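The bookkeeping of these quantum numbers can be sketched in a few lines. The sketch uses the standard labels, which are not introduced in the text above:

- n labels the energy level.
- l fixes the magnitude of L, with |L| = ħ√(l(l+1)), ħ = h/(2π) and l = 0, …, n−1.
- m fixes the z component, with L_z = mħ and m = −l, …, l.

Electron spin and fine-structure corrections are ignored. The rounded level formula Eₙ = −13.605693 eV/n² assumes an infinitely heavy nucleus. The code lists the states that share each energy and evaluates f₂₁ = (E₂ − E₁)/h.

```python
H = 6.62607015e-34      # Planck's constant, J s
EV = 1.602176634e-19    # joules per electronvolt
RYDBERG_EV = 13.605693  # ASSUMED: hydrogen binding energy in eV for an infinitely heavy nucleus

def energy_ev(n):
    """E_n in eV."""
    return -RYDBERG_EV / n**2

def states(n):
    """All (n, l, m) with energy E_n: l = 0..n-1 fixes |L|, m = -l..l fixes L_z (spin ignored)."""
    return [(n, l, m) for l in range(n) for m in range(-l, l + 1)]

def frequency(upper, lower):
    """f = (E_upper - E_lower) / h in Hz; the text's f_mn with m = upper, n = lower."""
    return (energy_ev(upper) - energy_ev(lower)) * EV / H

for n in range(1, 4):
    print(f"E_{n} = {energy_ev(n):.3f} eV, {len(states(n))} states (l, m):",
          [(l, m) for _, l, m in states(n)])
print(f"f_21 = {frequency(2, 1):.4e} Hz")
```

Level n has n² such states. A state is therefore only singled out once both angular-momentum quantities are fixed, and the second of them refers to a chosen axis.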

It might have been tempting to think of each of these states in the classical sense of the word “state,” as a bunch of possessed properties. But then, why should a state that is fully specified by the values of three physical quantities depend on an arbitrary axis (usually taken to be the z axis)? The answer, once again, lies in the contextuality of quantum mechanics. The three quantities are defined by the manner in which they are measured, and they only exist if they are actually measured. The reason why the relevant component of L depends on a particular axis is that it is defined by the orientation of the apparatus by which it is measured.

So an “allowed” state of atomic hydrogen only exists if its three defining quantities are the outcomes of three measurements. But if it’s not a bunch of intrinsically possessed properties, what is this state when it’s at home, and in what sense does it exist? It is, and only exists as, a predictive tool. It is a mathematical machine with inputs and outputs: you insert the three measured quantities, along with the possible outcomes of whichever physical quantity you intend to measure next, and out pop the respective probabilities with which these outcomes will be obtained.

Now is the time to be more specific about the meaning of “allowed.” The original idea was that only states with the specific energies E₁, E₂, E₃, … could exist. In the mature theory it turned out that these states were stationary, in the sense that the probabilities assigned by them did not change with time. All other states were (mathematically speaking) linear combinations of these states. While a stationary state assigns probability 1 to exactly one of the values E₁, E₂, E₃, …, a non-stationary state assigns non-zero probabilities to at least two of them. What remains true is that a measurement of the atom’s energy will only yield one of these values.
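The "mathematical machine" can be sketched in code. Hydrogen's states are three-dimensional and awkward to integrate, so the sketch swaps in the simplest textbook system as a stand-in: a particle in a one-dimensional box of width 1, in units where ħ = m = 1. A state is a set of coefficients cₙ on the stationary states, and the Born rule turns these coefficients into probabilities. For a stationary state, the probability of finding the particle in the left half of the box stays at 0.5. For an equal mixture of the two lowest stationary states, that probability oscillates between about 0.08 and 0.92, while the energy probabilities |cₙ|² stay fixed.

```python
import cmath
import math

# STAND-IN MODEL (not hydrogen): particle in a box of width 1, units hbar = m = 1.
def phi(n, x):
    """Stationary state n of the box."""
    return math.sqrt(2) * math.sin(n * math.pi * x)

def energy(n):
    """Energy E_n of stationary state n."""
    return (n * math.pi) ** 2 / 2

def prob_left_half(coeffs, t, steps=2000):
    """Born-rule probability of finding the particle in 0 < x < 1/2 at time t."""
    dx = 0.5 / steps
    total = 0.0
    for i in range(steps):
        x = (i + 0.5) * dx  # midpoint rule
        amp = sum(c * phi(n, x) * cmath.exp(-1j * energy(n) * t)
                  for n, c in coeffs.items())
        total += abs(amp) ** 2 * dx
    return total

def energy_probs(coeffs):
    """Probabilities of the possible energy outcomes, |c_n|^2; these do not depend on t."""
    return {energy(n): abs(c) ** 2 for n, c in coeffs.items()}

stationary = {1: 1.0}
mixed = {1: 1 / math.sqrt(2), 2: 1 / math.sqrt(2)}
period = 2 * math.pi / (energy(2) - energy(1))
for t in (0.0, period / 4, period / 2):
    print(f"t = {t:.3f}: stationary {prob_left_half(stationary, t):.4f}, "
          f"mixed {prob_left_half(mixed, t):.4f}")
print(energy_probs(mixed))
```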

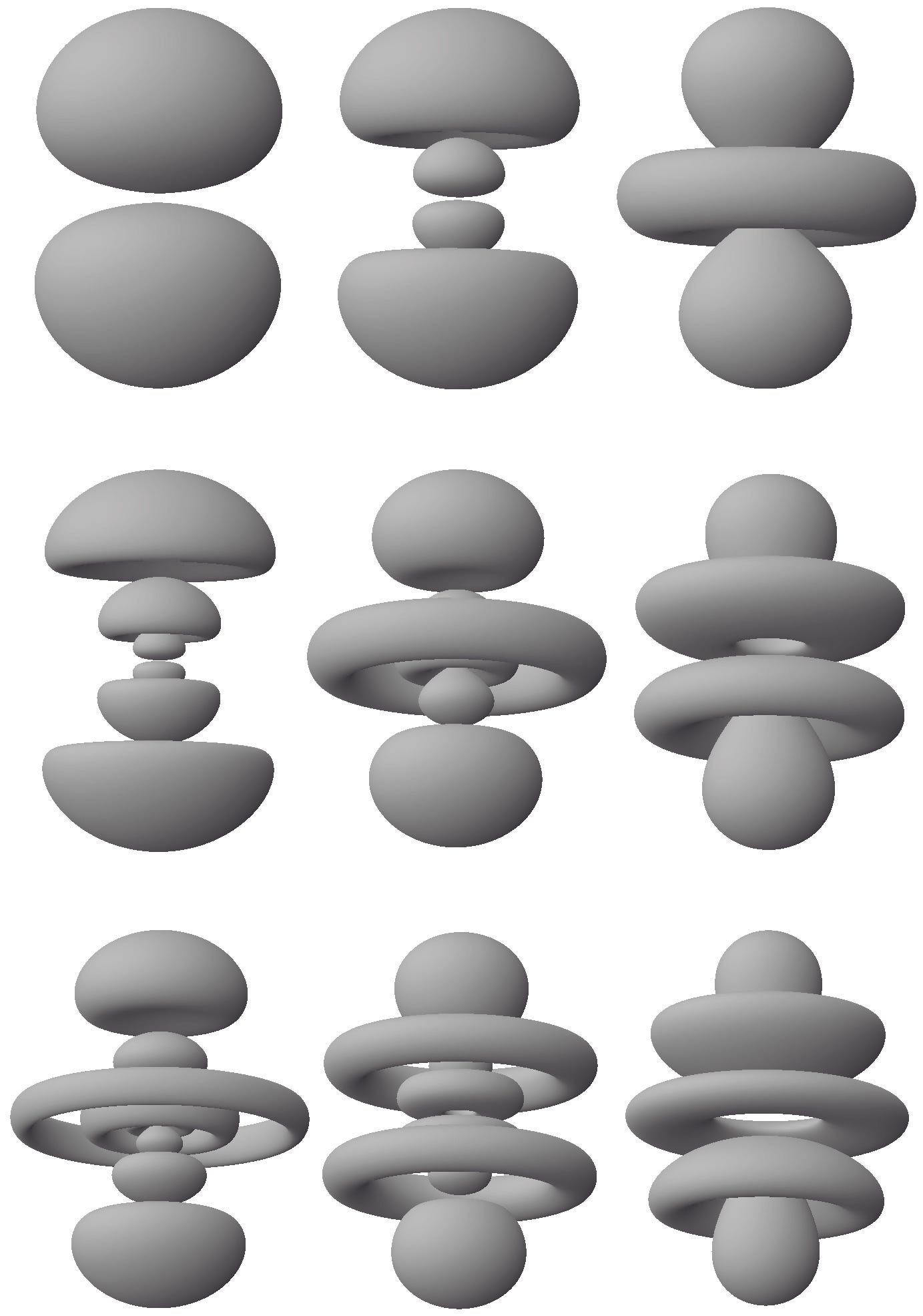

Now suppose we are in a position to measure the position of the hydrogen atom’s single electron relative to the atom’s nucleus. The following image represents the corresponding probabilities (per unit volume) for nine of the atom’s stationary states.

The following image emphasizes the three-dimensional shapes of these “probability clouds” by displaying surfaces at which the probability of detecting the electron has a fixed value.

In a rather obvious way, the electron’s position relative to the nucleus is fuzzy. But this must not be taken literally. What each of these probability clouds represents is not a literally fuzzy position but the probabilities with which the electron would be found in any small volume of space (relative to the nucleus) if the appropriate measurement were made. Where these probability clouds are darker or denser, the probability of detecting the electron is higher.
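A short numerical sketch for the ground state (the 1s state) makes the cloud concrete. The probability per unit volume is largest at the nucleus. Multiplying it by the area 4πr² of a spherical shell gives the probability per unit radius, P(r) = 4r²/a₀³ · e^(−2r/a₀), where a₀ ≈ 0.0529 nm is the Bohr radius. This is the standard textbook result and is not derived in this letter. The code integrates P(r) on a grid and finds:

- the most probable distance at a₀;
- the mean distance at 1.5 a₀ ≈ 0.079 nm;
- a probability of about 32% of finding the electron closer than a₀.

```python
import math

A0 = 0.0529177  # Bohr radius in nanometres

def radial_density(r):
    """Probability per unit radius (1/nm) of finding the 1s electron at distance r (nm)."""
    return 4 * r**2 / A0**3 * math.exp(-2 * r / A0)

dr = 1e-5                                       # grid spacing in nm
rs = [(i + 0.5) * dr for i in range(100_000)]   # midpoints covering 0..1 nm
ps = [radial_density(r) for r in rs]

total = sum(ps) * dr                            # very close to 1: the electron is somewhere
mean_r = sum(r * p for r, p in zip(rs, ps)) * dr
most_probable = max(zip(ps, rs))[1]             # r at which the density peaks
inside_a0 = sum(p for r, p in zip(rs, ps) if r < A0) * dr

print(f"total probability {total:.4f}")
print(f"most probable r = {most_probable:.4f} nm, mean r = {mean_r:.4f} nm")
print(f"P(r < a0) = {inside_a0:.3f}")
```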

As Rutherford had concluded in 1911, each atom “occupies” an enormously larger volume of space than its nucleus or any of its electrons (which do not appear to occupy any space). So how is an atom able to occupy as much space as it does without collapsing, and how can it be stable?

Consider again the lowly hydrogen atom. What is at least partly responsible for its “size”, roughly a tenth of a nanometer in its ground state, is the fuzziness of the electron’s position relative to the nucleus (usually a single proton). But being fuzzy (in the above sense) is not enough; the electron’s position must also stay fuzzy. If the electrostatic attraction between the negatively charged electron and the positively charged proton were the only force at work, it would cause the probability cloud associated with this position to shrink. The position itself would become sharper, less fuzzy, and the atom would collapse as a result. To ensure stability, the electrostatic attraction must be counterbalanced by an effective repulsion. And the simplest and most elegant way of obtaining such a repulsion, which also happens to be the one adopted by Nature, is to let the momentum of the electron (relative to that of the proton) be fuzzy as well.

The following drawing may serve to illustrate this. Above: if the electron’s momentum has a definite value, the fuzziness of the electron’s position does not change as the electron moves from left to right. Below: if the electron’s momentum is fuzzy as well, the electron covers a fuzzy distance between any two given times. The two kinds of fuzziness combine, with the result that the electron’s position grows fuzzier with time. (The reason why the maxima of the curves decrease as their widths increase is that the areas below them represent the probability of finding the electron anywhere at all, which must remain equal to 1.)
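For a free electron, the drawing can be turned into numbers. The sketch assumes that the electron's position and momentum are uncorrelated at time zero, as they are for a real-valued Gaussian wave packet. The position spread then evolves as Δx(t)² = Δx(0)² + (Δp·t/m)², so the two kinds of fuzziness add in quadrature. The initial spread of 0.1 nm is an assumption chosen to match the size of an atom. The momentum spread is set to the smallest value compatible with the uncertainty relation, ħ/(2Δx) with ħ = h/(2π). With these numbers the spread grows by a factor √2 in under 0.2 femtoseconds.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant h/(2 pi), J s
M_E = 9.1093837015e-31  # electron mass, kg

def position_spread(t, sx0, sp, m=M_E):
    """Standard deviation of a free particle's position at time t.

    ASSUMES position and momentum are uncorrelated at t = 0 (true, e.g., for a
    real Gaussian wave packet); the two spreads then add in quadrature.
    """
    return math.sqrt(sx0**2 + (sp * t / m) ** 2)

def peak_height(sx):
    """Peak of a normalized Gaussian of width sx: wider means lower, so the area stays 1."""
    return 1 / (sx * math.sqrt(2 * math.pi))

sx0 = 0.1e-9               # ASSUMED initial position spread: 0.1 nm
sp_min = HBAR / (2 * sx0)  # smallest momentum spread compatible with sx0
for t_fs in (0.0, 0.1, 0.2, 0.5, 1.0):
    t = t_fs * 1e-15
    # Idealized upper panel: momentum spread 0. (Strictly, a definite momentum
    # would require an unbounded position spread.)
    sharp = position_spread(t, sx0, 0.0)
    fuzzy = position_spread(t, sx0, sp_min)  # lower panel
    print(f"t = {t_fs:.1f} fs: sharp p -> {sharp * 1e9:.3f} nm, "
          f"fuzzy p -> {fuzzy * 1e9:.3f} nm")
```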

The atom thus requires for its stability an equilibrium between an electrostatic attraction (tending to reduce the fuzziness of the electron’s position) and a fuzzy momentum (tending to increase the same). But mere equilibrium is not enough. The equilibrium must also be stable. If the fuzziness of the radial component r of the electron’s position relative to the proton decreases, the mean distance between the two particles decreases, and their electrostatic attraction increases as a result. A stable equilibrium is possible only if the effective repulsion increases at the same time. For this to happen, the mean magnitude of the radial component p of the electron’s momentum relative to that of the proton must increase. Since the mean of p itself is zero in a stationary state, this calls for an increase in the fuzziness of p.
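A standard order-of-magnitude estimate shows why this equilibrium is stable. It is a textbook heuristic, not a derivation from this letter. Suppose the electron is confined to a region of size r, and take its momentum fuzziness to be about ħ/r. The kinetic energy from that fuzziness, ħ²/(2mr²), plays the role of the effective repulsion and grows as 1/r² as r shrinks. The Coulomb attraction −e²/(4πε₀r) grows only as 1/r, so the sum has a minimum. The sketch finds that minimum numerically. It lands at the Bohr radius (about 0.0529 nm) and at about −13.6 eV, the measured ground-state energy of hydrogen. That exact agreement depends on the particular choice Δp = ħ/r.

```python
import math

HBAR = 1.054571817e-34             # reduced Planck constant, J s
M_E = 9.1093837015e-31             # electron mass, kg
E = 1.602176634e-19                # elementary charge, C
EPS0 = 8.8541878128e-12            # vacuum permittivity, F/m
KE2 = E**2 / (4 * math.pi * EPS0)  # strength of the Coulomb attraction, J m

def energy(r):
    """Heuristic energy (J) of an electron confined to a region of size r (m).

    ASSUMPTION: momentum fuzziness taken as HBAR / r (order of magnitude only).
    """
    kinetic = HBAR**2 / (2 * M_E * r**2)  # effective repulsion, grows as 1/r**2
    potential = -KE2 / r                  # attraction, grows only as 1/r
    return kinetic + potential

# Scan r from 0.01 nm to 0.5 nm in steps of 1e-5 nm and keep the lowest energy.
radii = [i * 1e-14 for i in range(1000, 50001)]
r_best = min(radii, key=energy)
print(f"equilibrium radius: {r_best * 1e9:.4f} nm")
print(f"energy there: {energy(r_best) / E:.2f} eV")
```

Squeezing the electron (smaller r) or letting it spread (larger r) both raise the energy, which is what makes the equilibrium stable rather than merely balanced.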

A convenient measure of fuzziness is the width of the corresponding probability function, and a convenient way to measure this is the corresponding standard deviation. What I have just said can be stated more succinctly: a decrease in the standard deviation Δr of the probability function for r calls for an increase in the standard deviation Δp of the probability function for p. And conversely: an increase in Δr calls for a decrease in Δp. The simplest way to achieve this reciprocity is to let the product of the two standard deviations have a positive lower limit:

Δr Δp ≥ C h

The right-hand side must be a multiple C of Planck’s constant h because the product of position and momentum happens to be measured in the same units as Planck’s constant. The actual value of C is 1/(4π). This uncertainty relation was first derived by Earle Kennard in 1927. It was then hailed by Heisenberg as the correct mathematical expression of the uncertainty principle, by which he meant the impossibility in principle of simultaneously determining the exact position and the exact momentum of a particle.
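Here is a quick numerical check of the bound, as a one-dimensional sketch. The code computes Δx and Δp, as standard deviations, for two real-valued wavefunctions in units where ħ = h/(2π) = 1. For a real wavefunction the mean momentum is zero and ⟨p²⟩ = ħ²∫(dψ/dx)² dx / ∫ψ² dx, which avoids a Fourier transform. A Gaussian sits exactly on the bound, Δx Δp/h = 1/(4π) ≈ 0.0796. A sech-shaped profile, chosen here only for comparison, gives 1/12 ≈ 0.0833, which is above the bound.

```python
import math

HBAR = 1.0              # ASSUMED units in which hbar = 1
H = 2 * math.pi * HBAR  # Planck's constant h = 2*pi*hbar

def spreads(psi, a=-20.0, b=20.0, n=20001):
    """Return (delta_x, delta_p) for a real wavefunction psi sampled on [a, b]."""
    step = (b - a) / (n - 1)
    xs = [a + i * step for i in range(n)]
    ys = [psi(x) for x in xs]
    norm = sum(y * y for y in ys) * step
    mean_x = sum(x * y * y for x, y in zip(xs, ys)) * step / norm
    var_x = sum((x - mean_x) ** 2 * y * y for x, y in zip(xs, ys)) * step / norm
    # For a real wavefunction <p> = 0 and <p^2> = hbar^2 * integral of (dpsi/dx)^2.
    dpsi = [(ys[i + 1] - ys[i - 1]) / (2 * step) for i in range(1, n - 1)]
    var_p = HBAR**2 * sum(d * d for d in dpsi) * step / norm
    return math.sqrt(var_x), math.sqrt(var_p)

def gaussian(x):
    return math.exp(-x * x / 4)  # |psi|^2 is a Gaussian with delta_x = 1

def sech(x):
    return 1 / math.cosh(x)      # a different bell-shaped profile, for comparison

for name, psi in (("gaussian", gaussian), ("sech", sech)):
    dx, dp = spreads(psi)
    print(f"{name}: dx*dp/h = {dx * dp / H:.5f} (bound 1/(4*pi) = {1 / (4 * math.pi):.5f})")
```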

While “uncertainty” has become the most commonly used term in the English literature, Heisenberg’s favorite terminology was “inaccuracy relation” (Ungenauigkeitsrelation) or “indeterminacy relation” (Unbestimmtheitsrelation). No matter the term being used, what “fluffs out” matter — what makes atoms and all things made of atoms occupy as much space as they do — is neither a subjective uncertainty about an objective state of affairs nor a practical limitation on the accuracy of measurements. It is a fundamental indeterminacy that reveals itself to us through a fundamental limitation on the predictability of the outcomes of certain simultaneously performed measurements, such as a measurement of the position and a measurement of the momentum of a particle.

Quantum theory thus agrees with Boscovich that the stability of material objects is based on equilibria between attractions and repulsions. What Boscovich could not have foreseen is that the relevant repulsions are not due to classical forces (which act on objects at exact locations by changing their exact momenta) but instead result from an essential fuzziness of relative momenta which, unopposed, causes the essential fuzziness of the corresponding relative positions to increase.

Erratum: In the mailing the value of C was wrongly stated as π/4 = (1/4)π, instead of 1/(4π). Brackets matter!
